This page gathers the extended abstracts from Topics 5 and 6 of the Compass Conference: Transferable Skills for Research & Innovation, 2023, October 4 – 5, Helsinki, Finland.

AGORA: Automated Generation of Test Oracles for REST APIs

Alonso, Juan C.

Corresponding author – presenter

javalenzuela@us.es; University of Seville, Spain

Segura, Sergio

Ruiz-Cortés, Antonio

University of Seville, Spain

Keywords: REST APIs, test oracles, automated testing

Test case generation tools for REST APIs have grown in number and complexity in recent years. However, their capabilities for automated input generation contrast with the simplicity of their test oracles (i.e., mechanisms for determining whether a test has passed or failed), limiting the types of failures they can detect. AGORA is an approach for the automated generation of test oracles for REST APIs through the detection of invariants (properties of the output that should always hold). AGORA aims to learn the expected behavior of an API by analyzing previous API requests and their corresponding responses. For this, we extended Daikon, a tool that applies machine learning techniques to dynamically detect likely invariants, including the definition of new types of invariants and the implementation of an instrumenter called Beet. Beet converts any OpenAPI specification and a collection of API requests to a format processable by Daikon.

AGORA achieved a total precision of 81.2% when tested on a dataset of 11 operations from 7 industrial APIs. AGORA revealed 11 bugs in APIs with millions of users: Amadeus, GitHub, Marvel, OMDb and YouTube. Our reports have guided developers in improving their APIs, including bug fixes and documentation updates in GitHub.

A preliminary version of this work obtained the first prize in the ESEC/FSE 2022 Student Research Competition (Alonso, Automated Generation of Test Oracles for RESTful APIs, 2022) and the second prize in the ACM SRC Grand Finals (Alonso, SRC Grand Finalists 2023, 2023). An extended version of this work has been accepted for publication in the ISSTA 2023 conference.

Background of the study

Web Application Programming Interfaces (APIs) allow heterogeneous software systems to interact over the network (Richardson, Amundsen, & Ruby, 2013). Companies such as Google, Microsoft and Apple provide web APIs to enable their integration into third-party systems. Most web APIs adhere to the REpresentational State Transfer (REST) architectural style, being known as REST APIs (Fielding, 2000). REST APIs are specified using the OpenAPI Specification (OAS) (OpenAPI Specification, 2023), which defines a standard notation for describing them in terms of operations, input parameters, and output formats.

Automated test case generation for REST APIs is a thriving research topic (Kim, Xin, Sinha, & Orso, 2022). Despite the promising results of existing proposals, they are all limited by the types of errors they can detect: uncontrolled server failures (labelled with a 500 status code) and disconformities with the API specification (e.g., the API response contains a field that is not present in the specification). Generally, there are dozens or hundreds of possible test oracles for every API operation. For example, if we perform a search for songs in the Spotify API by setting a maximum number of results (limit parameter), the size of the response field containing an array of songs should be equal to or smaller than the value of limit. Currently, creating these test oracles is a costly and manual endeavour that requires domain knowledge.
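As an illustration, the Spotify example can be written as an executable check. This is only a minimal sketch: the function name and the response layout (`tracks.items`) are assumptions made for the example, not details taken from the Spotify API or from AGORA.

```python
def check_limit_oracle(request_params: dict, response_body: dict) -> bool:
    """Oracle: the number of returned songs must not exceed `limit`.

    Assumption (hypothetical layout): the songs are stored under
    response_body["tracks"]["items"].
    """
    limit = request_params["limit"]
    songs = response_body["tracks"]["items"]
    return len(songs) <= limit


# A response that respects the limit passes; one that exceeds it fails.
ok_response = {"tracks": {"items": [{"name": "a"}, {"name": "b"}]}}
bad_response = {"tracks": {"items": [{"name": "a"}, {"name": "b"}, {"name": "c"}]}}

print(check_limit_oracle({"limit": 2}, ok_response))
print(check_limit_oracle({"limit": 2}, bad_response))
```

Writing one such check by hand is easy; the cost mentioned above comes from having to discover and maintain dozens of them per operation.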

Aim of the study

We present AGORA, the first approach for the automated generation of test oracles for REST APIs. AGORA is based on the detection of invariants, properties of the output that should always hold, e.g., a JSON property from the response should always have the same value as one of the input parameters. For this, we developed an extension (a so-called instrumenter) of Daikon (Ernst, et al., 2007), a popular tool that uses machine learning techniques for dynamic detection of likely invariants. Generated invariants can be used as potential test oracles, and they can also help to identify unexpected behaviour (bugs) in the software under test.

Methodology

Figure 1 shows an overview of AGORA. At the core of the approach is Beet, a novel Daikon instrumenter. Beet receives three inputs: 1) the OAS specification of the API under test, 2) a set of API requests, and 3) the corresponding API responses. As a result, Beet returns an instrumentation of the API requests consisting of a declaration file (describing the format of the inputs and outputs of the API operations) and a data trace file (specifying the values assigned to each input parameter and response field in each API call). This instrumentation is processed by our customized version of Daikon, resulting in a set of likely invariants that can be used as test oracles.

Figure 1: Workflow of AGORA

Beet has been implemented in Java and is open source. Our GitHub repository contains an exhaustive description of the instrumentation process with examples (Beet Repository, 2023).

To identify invariants that could be used as test oracles, we used a benchmark of 40 APIs (702 operations) systematically collected by the authors for a previous publication (Alonso, Martin-Lopez, Segura, Garcia, & Ruiz-Cortes, 2023).

We implemented 22 new invariants, suppressed 36 default Daikon invariants, and activated 9 invariants disabled by default in Daikon. This version of Daikon supports 105 distinct types of invariants, classified into five categories:

- Arithmetic comparisons (48 invariants). Specify numerical bounds (e.g., size(return.artists[]) >= 1) and relations between numerical fields.

- Array properties (23 invariants). Represent comparisons between arrays, such as subsets, supersets, or fields that are always members of an array (e.g., return.hotel.hotelId in input.hotelIds[]).

- Specific formats (22 invariants). Specify restrictions regarding the expected format (e.g., return.href is Url) or length of string fields.

- Specific values (9 invariants). Restrict the possible values of fields (e.g., return.visibility one of {“public”, “private”}).

- String comparisons (3 invariants). Specify relations between string fields, such as equality (e.g., input.name == return.name) or substrings.

We refer the reader to the AGORA GitHub repository (Beet Repository, 2023) for a more detailed description of each invariant.
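To make the five categories concrete, the example invariants quoted in the list above can be read as predicates over one request/response pair. The sketch below is illustrative only: the function names, the sample data and the URL check based on `urllib.parse` are assumptions, and it does not reproduce how Daikon or AGORA implement these invariants.

```python
from urllib.parse import urlparse


def arithmetic_bound(ret: dict) -> bool:
    # Arithmetic comparison: size(return.artists[]) >= 1
    return len(ret["artists"]) >= 1


def array_membership(inp: dict, ret: dict) -> bool:
    # Array property: return.hotel.hotelId in input.hotelIds[]
    return ret["hotel"]["hotelId"] in inp["hotelIds"]


def is_url(ret: dict) -> bool:
    # Specific format: return.href is Url (simplified check, an assumption)
    parsed = urlparse(ret["href"])
    return parsed.scheme in ("http", "https") and bool(parsed.netloc)


def one_of(ret: dict) -> bool:
    # Specific values: return.visibility one of {"public", "private"}
    return ret["visibility"] in {"public", "private"}


def string_equality(inp: dict, ret: dict) -> bool:
    # String comparison: input.name == return.name
    return inp["name"] == ret["name"]


# Made-up request/response pair mixing fields from several APIs.
inp = {"hotelIds": ["H1", "H2"], "name": "demo-repo"}
ret = {
    "artists": [{"id": 1}],
    "hotel": {"hotelId": "H2"},
    "href": "https://api.example.com/v1/items/1",
    "visibility": "private",
    "name": "demo-repo",
}

results = {
    "arithmetic": arithmetic_bound(ret),
    "array": array_membership(inp, ret),
    "format": is_url(ret),
    "values": one_of(ret),
    "string": string_equality(inp, ret),
}
print(results)
```

A response that breaks any of these predicates would be flagged as a potential failure when the invariant is used as a test oracle.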

Results

For our experiments, we resorted to a set of 11 operations from 7 industrial APIs. For each operation, we automatically generated and executed API calls using the RESTest (Martin-Lopez, Segura, & Ruiz-Cortés, 2020) framework until obtaining 10K valid API calls per operation (110K calls in total). According to REST best practices (Richardson, Amundsen, & Ruby, 2013), we consider an API response as valid if it is labelled with a 2XX HTTP status code. We performed the following experiments to assess the effectiveness of AGORA for generating test oracles and detecting failures:

Experiment 1: Test oracle generation.

For each API operation, we randomly divided the set of automatically generated requests (10K) into subsets of 50, 100, 500, 1K and 10K requests. Then, we ran AGORA using each subset as input and computed precision by manually classifying the inferred invariants as true or false positives. True positive invariants describe properties of the output that should always hold and therefore are valid test oracles. A false positive reflects a pattern that has been observed in all the API requests and responses provided as input but does not represent the expected behavior of the API.

We compared the effectiveness of AGORA against the default version of Daikon in identifying likely invariants for REST APIs. In both cases, Beet was used to transform API specifications and requests into inputs for Daikon. With this experiment, we aim to answer the following research questions:

- RQ1: Effectiveness of AGORA to generate test oracles.

Table 1 shows the results for each API operation, set of API requests (50, 100, 500, 1K, 10K) and approach (AGORA vs default Daikon). The columns labelled with “I” and “P” represent the number of likely invariants detected and the total precision, respectively.

When learning from the whole dataset, AGORA obtained a total precision of 81.2%, whereas the original configuration of Daikon achieved 51.4%. AGORA outperformed the default configuration of Daikon in all the API operations.

- RQ2: Impact of the size of the input dataset to the precision of AGORA.

The test suites of 50 requests seem to offer the best trade-off between effectiveness and cost of generating API requests. The total precision of AGORA improved from 73.2% with 50 API requests, to 81.2% with the complete dataset.

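| API – Operation | 50 calls: Daikon I | 50 calls: Daikon P (%) | 50 calls: AGORA I | 50 calls: AGORA P (%) | 100 calls: Daikon I | 100 calls: Daikon P (%) | 100 calls: AGORA I | 100 calls: AGORA P (%) | 500 calls: Daikon I | 500 calls: Daikon P (%) | 500 calls: AGORA I | 500 calls: AGORA P (%) | 1K calls: Daikon I | 1K calls: Daikon P (%) | 1K calls: AGORA I | 1K calls: AGORA P (%) | 10K calls: Daikon I | 10K calls: Daikon P (%) | 10K calls: AGORA I | 10K calls: AGORA P (%) |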

| AmadeusHotel-hotelOffers | 109 | 21.1 | 117 | 52.1 | 136 | 16.9 | 114 | 56.1 | 116 | 22.4 | 108 | 64.8 | 107 | 24.3 | 107 | 66.4 | 99 | 26.3 | 106 | 67.9 |

| GitHub-createOrgRepo | 82 | 95.1 | 198 | 98 | 82 | 95.1 | 198 | 98 | 80 | 96.2 | 198 | 98.5 | 80 | 96.2 | 198 | 98.5 | 80 | 96.2 | 198 | 98.5 |

| GitHub-getOrgRepos | 45 | 40 | 150 | 84.7 | 40 | 45 | 147 | 88.4 | 39 | 46.2 | 149 | 87.9 | 39 | 46.2 | 150 | 88 | 38 | 47.4 | 148 | 89.2 |

| Marvel-comicById | 178 | 29.8 | 115 | 47.8 | 194 | 28.9 | 127 | 46.5 | 178 | 33.7 | 119 | 52.1 | 167 | 35.9 | 106 | 58.5 | 140 | 45.7 | 96 | 65.6 |

| OMDB-byIdOrTitle | 7 | 57.1 | 16 | 93.8 | 7 | 57.1 | 16 | 93.8 | 7 | 57.1 | 16 | 93.8 | 8 | 50 | 17 | 88.2 | 7 | 57.1 | 16 | 93.8 |

| OMDB-bySearch | 4 | 100 | 5 | 100 | 7 | 57.1 | 7 | 71.4 | 5 | 80 | 6 | 83.3 | 5 | 80 | 6 | 83.3 | 6 | 83.3 | 7 | 85.7 |

| Spotify-createPlaylist | 22 | 100 | 41 | 100 | 22 | 100 | 41 | 100 | 22 | 100 | 41 | 100 | 22 | 100 | 41 | 100 | 22 | 100 | 41 | 100 |

| Spotify-getAlbumTracks | 46 | 45.7 | 68 | 85.3 | 45 | 46.7 | 67 | 86.6 | 42 | 50 | 66 | 87.9 | 42 | 50 | 66 | 87.9 | 41 | 53.7 | 66 | 89.4 |

| Spotify-getArtistAlbums | 53 | 43.4 | 55 | 81.8 | 49 | 49 | 52 | 88.5 | 35 | 68.6 | 50 | 92 | 32 | 75 | 50 | 92 | 31 | 83.9 | 52 | 92.3 |

| Yelp-getBusinesses | 60 | 28.3 | 30 | 40 | 55 | 30.9 | 33 | 36.4 | 46 | 37 | 25 | 48 | 45 | 37.8 | 23 | 52.2 | 41 | 39 | 22 | 50 |

| YouTube-listVideos | 228 | 31.6 | 194 | 57.2 | 227 | 32.2 | 199 | 56.3 | 218 | 35.8 | 191 | 62.3 | 225 | 36 | 200 | 61.5 | 201 | 41.3 | 196 | 65.3 |

| TOTAL | 834 | 40.2 | 989 | 73.2 | 864 | 39.4 | 1001 | 73.5 | 788 | 44.5 | 969 | 77.8 | 772 | 45.9 | 964 | 78.8 | 706 | 51.4 | 948 | 81.2 |

Table 1: Test oracle generation. I = “Number of likely invariants”, P = “Precision (% valid test oracles)”
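The TOTAL row can be cross-checked against the per-operation rows. The sketch below does so for the 10K column; since only percentages are reported, the number of true positives per operation is reconstructed by rounding I × P / 100, which is an assumption of this example rather than data from the study.

```python
# (invariants I, precision P in %) per operation, 10K API calls column of Table 1.
AGORA_10K = [
    (106, 67.9), (198, 98.5), (148, 89.2), (96, 65.6), (16, 93.8), (7, 85.7),
    (41, 100.0), (66, 89.4), (52, 92.3), (22, 50.0), (196, 65.3),
]
DAIKON_10K = [
    (99, 26.3), (80, 96.2), (38, 47.4), (140, 45.7), (7, 57.1), (6, 83.3),
    (22, 100.0), (41, 53.7), (31, 83.9), (41, 39.0), (201, 41.3),
]


def total_precision(rows):
    """Return (total invariants, overall precision in %) for one approach."""
    total_invariants = sum(i for i, _ in rows)
    # Reconstructed true positives: an assumption, see the note above.
    true_positives = sum(round(i * p / 100) for i, p in rows)
    return total_invariants, round(100 * true_positives / total_invariants, 1)


print(total_precision(AGORA_10K))   # (948, 81.2)
print(total_precision(DAIKON_10K))  # (706, 51.4)
```

Total precision is therefore a ratio over all invariants, not an average of the per-operation percentages, so operations with many invariants (e.g., GitHub-createOrgRepo) weigh more.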

Experiment 2: Failure detection.

To evaluate the effectiveness of the generated test oracles in detecting failures in the APIs under test, we systematically seeded errors in API responses using JSONMutator (JSONMutator, 2023), a tool that applies different mutation operators on JSON data, e.g., removing an array item.

For each API operation, we transformed the test oracles derived from the set of 50 test cases into executable assertions. Then, for each API operation, we randomly selected 1K API responses from the set of 10K test cases generated by RESTest.

We used JSONMutator to introduce a single error on each API response. Then, we ran the assertions and marked the failure as detected if at least one of the test assertions (i.e., test oracles) was violated. To minimize the effect of randomness, we repeated this process 10 times per operation and computed the average percentage of failures detected.
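The procedure can be sketched as follows. This is a toy illustration under stated assumptions: `remove_array_item` is a hand-written stand-in for one mutation operator (it is not the JSONMutator API), and the two oracles and the sample calls are invented for the example.

```python
import copy
import random


def remove_array_item(response: dict, rng: random.Random) -> dict:
    """Toy mutation operator: remove one random item from the `items` array."""
    mutant = copy.deepcopy(response)
    if mutant["items"]:
        mutant["items"].pop(rng.randrange(len(mutant["items"])))
    return mutant


# Test oracles expressed as predicates over (request, response).
ORACLES = [
    lambda req, res: len(res["items"]) >= 1,             # size(return.items[]) >= 1
    lambda req, res: len(res["items"]) <= req["limit"],  # size(return.items[]) <= input.limit
]


def failure_detected(req: dict, res: dict) -> bool:
    """A seeded failure is detected if at least one oracle is violated."""
    return any(not oracle(req, res) for oracle in ORACLES)


def failure_detection_ratio(calls, runs: int = 10, seed: int = 0) -> float:
    """Average percentage of mutated responses flagged by the oracles."""
    rng = random.Random(seed)
    ratios = []
    for _ in range(runs):
        detected = sum(
            failure_detected(req, remove_array_item(res, rng)) for req, res in calls
        )
        ratios.append(100 * detected / len(calls))
    return sum(ratios) / len(ratios)


calls = [
    ({"limit": 5}, {"items": ["a"]}),            # mutant has 0 items: detected
    ({"limit": 5}, {"items": ["a", "b", "c"]}),  # mutant has 2 items: undetected
]
print(failure_detection_ratio(calls))  # 50.0
```

The second call shows why the ratio depends on the strength of the oracles: removing an item from a three-element array violates neither bound, so that failure goes unnoticed.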

- RQ3: Effectiveness of the generated test oracles for detecting failures.

Table 2 shows the number of test assertions (i.e., test oracles) and the Failure Detection Ratio per API operation. Overall, test oracles generated by AGORA identified 57.2% of the failures.

| API – Operation | Assertions | FDR (%) |

| AmadeusHotel-getMultiHotelOffers | 61 | 60 |

| GitHub-createOrganizationRepository | 194 | 92.3 |

| GitHub-getOrganizationRepositories | 127 | 62.9 |

| Marvel-getComicById | 55 | 37.7 |

| OMDB-byIdOrTitle | 15 | 36.5 |

| OMDB-bySearch | 5 | 20.6 |

| Spotify-createPlaylist | 41 | 84.8 |

| Spotify-getAlbumTracks | 58 | 70.6 |

| Spotify-getArtistAlbums | 45 | 76.5 |

| Yelp-getBusinesses | 12 | 23.5 |

| YouTube-listVideos | 111 | 64.2 |

| TOTAL | 724 | 57.2 |

Detected Faults

AGORA detected 11 domain-specific bugs in 7 operations from 5 APIs with millions of users worldwide. For example, one of the reported invariants in the Amadeus Hotel API led to the identification of 55 hotel room offers with zero beds (return.room.typeEstimated.beds>=0). When performing a search in YouTube using the regionCode input parameter, the returned videos must be available in the provided region. However, a violation of the invariant input.regionCode in return.contentDetails.regionRestriction.allowed[] led us to detect 81 cases in which the returned videos were not available in the provided region. These errors have been confirmed by the developers.

Conclusions

We present the following original research and engineering contributions:

- AGORA, a black-box approach for the automated generation of test oracles for REST APIs.

- Beet, a Daikon instrumenter for REST APIs readily integrable into existing test case generation tools for REST APIs.

- A version of Daikon supporting the detection of 105 distinct types of invariants in REST APIs.

- An empirical evaluation in terms of precision and failure detection in 11 operations from 7 industrial APIs, including reports of 11 real-world bugs.

Future lines of work include:

- Automated generation of assertions from the reported invariants. This extension will be performed during a research stay with Professor Michael Ernst, creator of Daikon.

- Deploying AGORA to ease its integration into other applications. We have been contacted by Schemathesis (Schemathesis, 2023), a company that offers testing as a service of REST APIs, to implement AGORA into their framework.

References

Alonso, J. (2022). Automated Generation of Test Oracles for RESTful APIs. Proceedings of the 30th ACM Joint European Software Engineering Conference and Symposium on the Foundations of Software Engineering, (pp. 1808–1810). Singapore.

Alonso, J. (2023). SRC Grand Finalists 2023. Retrieved from https://src.acm.org/grand-finalists/2023

Alonso, J., Martin-Lopez, A., Segura, S., Garcia, J., & Ruiz-Cortes, A. (2023). ARTE: Automated Generation of Realistic Test Inputs for Web APIs. IEEE Transactions on Software Engineering, 348 – 363.

Beet Repository. (2023). Retrieved from https://github.com/isa-group/Beet

Ernst, M., Perkins, J., Guo, P., McCamant, S., Pacheco, C., Tschantz, M., & Xiao, C. (2007). The Daikon system for dynamic detection of likely invariants. Science of Computer Programming, 35-45.

Fielding, R. T. (2000). Architectural Styles and the Design of Network-based Software Architectures. University of California, Irvine.

JSONMutator. (2023). Retrieved from https://github.com/isa-group/JSONmutator

Kim, M., Xin, Q., Sinha, S., & Orso, A. (2022). Automated Test Generation for REST APIs: No Time to Rest Yet. Proceedings of the 31st ACM SIGSOFT International Symposium on Software Testing and Analysis, (pp. 289–301). Virtual, South Korea.

Martin-Lopez, A., Segura, S., & Ruiz-Cortés, A. (2020). RESTest: Black-Box Constraint-Based Testing of RESTful Web APIs. International Conference on Service-Oriented Computing, (pp. 459-475).

OpenAPI Specification. (2023). Retrieved from https://www.openapis.org

Richardson, L., Amundsen, M., & Ruby, S. (2013). RESTful Web APIs. O’Reilly Media, Inc.

Schemathesis. (2023). Retrieved from https://schemathesis.io

Analysing opinions on the COVID-19 passport using Natural Language Processing (NLP)

Aguilar Moreno, J.A.

Corresponding author – presenter

e-mail: juanantonio892@gmail.com

Universidad de Sevilla, Spain

Palos-Sanchez, P.R.

Universidad de Sevilla, Spain

Pozo-Barajas, R.

Universidad de Sevilla, Spain

Keywords: Natural Language Processing; Sentiment Analysis; Topics Analysis; RapidMiner; COVID-19 passport

Abstract

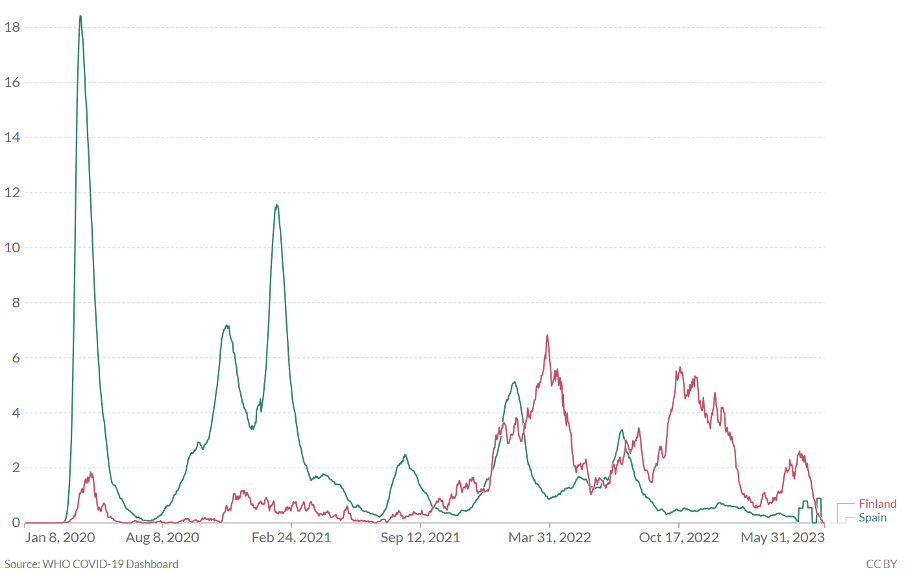

Social networks generate a large amount of information on a daily basis that can be analysed to understand phenomena and implement improvements for society. This article uses natural language processing techniques to identify the general opinion of YouTube comments on videos about the implementation of the COVID-19 passport, one of the most controversial measures to contain the advance of the pandemic while reactivating the economy, the reasons for this and the aspects that generate the most interest.

The RapidMiner software is used to find the most frequent words, to measure positive and negative sentiment, and to classify the comments by topic.

Literature review

The gradual return to normality after the COVID-19 pandemic has been accompanied by measures to contain a further increase in the number of infections at the same time as the economy is reactivated. One of the most hotly debated is the introduction of the COVID-19 passport by the European Union, a document required for travel without the need for testing or quarantine and for access to certain premises, including workplaces, which gives its holder a nominative accreditation of a higher level of immunity due to having recently overcome the disease or having been vaccinated with the required doses (Gstrein, 2021; Shin et al., 2021).

The political implications of COVID-19 passports are significant, requiring a balance between various interest groups and addressing ethical concerns such as health system inequities and personal choice (Sharun et al., 2021). Previous research analyzing Twitter opinions found a majority with favorable attitudes towards the passports, although concerns centered on the lack of consensus for a standardized certificate and ethical considerations like the digital divide and freedom (Khan et al., 2022). Conversely, a study conducted through focus group interviews revealed that the drawbacks outweighed the benefits, highlighting concerns about temporary immunity, virus mutation, and ethical issues (Fargnoli et al., 2021). Supporters emphasized economic recovery and a return to normalcy.

Aim of the study, originality and novelty

The central objective of this work is to identify the general opinion on the implementation of the COVID-19 passport, the reasons for it and the aspects that generate the most interest to study possible ways of improvement. In line with this objective, the research questions are as follows:

- RQ1: Has the COVID-19 passport been perceived as a measure of success or failure among users?

- RQ2: What are the main issues of concern or most frequently mentioned in the comments?

In order to answer these questions, this work has used a novel methodology based on NLP. Social media has become an important means of communication for society and an important source of easily accessible data for opinions and emotions, which in situations of major humanitarian disasters can be beneficial for the public sector (Malawani et al., 2020).

To this end, RapidMiner software is used to analyse comments on YouTube videos related to the COVID-19 passport.

Natural Language Processing (NLP) encompasses techniques such as Sentiment Analysis, which allow machines to process human language (López-Martínez and Sierra, 2020). Sentiment Analysis evaluates text content by assigning a numerical polarity based on the connotations of words (Saura et al., 2018), either by machine learning or by lexicon-based sentiment analysis (Kolchyna et al., 2015).

Methodology

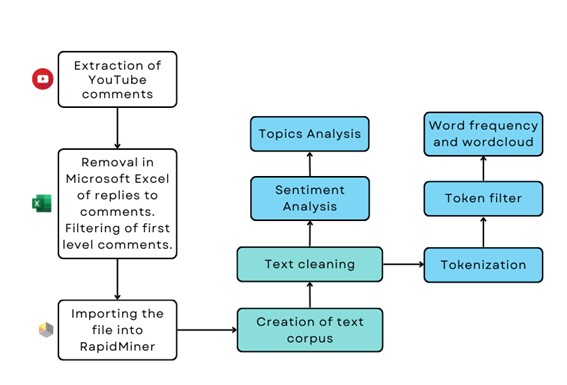

Figure 1. Methodological process

- Extracting comments: A total of 24,182 YouTube comments were extracted from videos of news related to the COVID-19 passport on British press media channels: BBC, The Sun and Daily Mail. Following the recommendations of Palos et al. (2022), the videos were selected according to subject matter, popularity, number of comments and language. The final set consists of 11 videos with more than 250 comments each. The extraction was carried out with MAXQDA Analytics Pro 2022 software.

- Filtering of top-level comments: Some of the extracted comments were replies to first-level comments, so to avoid bias they were removed in Microsoft Excel, leaving only the first-level comments (10,833).

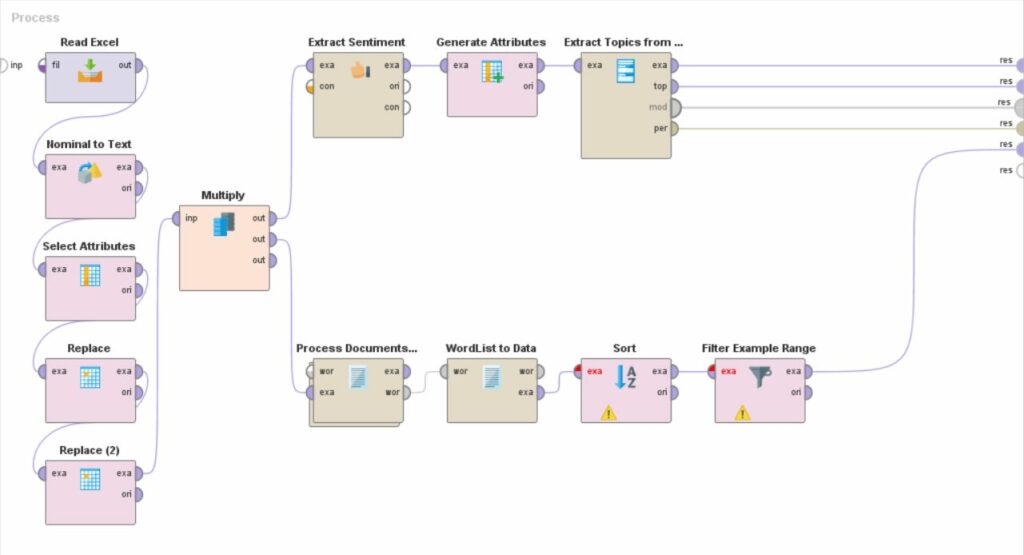

- Analysis with RapidMiner: The operators used in the RapidMiner process (Figure 2) and their role within it are detailed in the table below.

Figure 2. RapidMiner process

| Operator | Utility |

| Read Microsoft Excel | It imports the Microsoft Excel file containing the comments. |

| Nominal to Text | It converts nominal attributes to string attributes |

| Select Attributes | It selects the column in .xlsx file to be considered for the analysis (column of comments text) |

| Replace | It removes unwanted characters to clean the text: https?://[-a-zA-Z0-9+&@#/%?=~_(!:,;]*[-a-zA-Z0-9+&@#/%?=~_()]RT\s*@[^:]*:\s*[A-Za-z]+ |

| Multiply | It splits the process into two branches, each with its corresponding operators: (1) sentiment analysis and topic classification (Extract sentiment, Generate attributes, Extract Topics from Data); (2) word cloud generation (Process Document from Data, WordList to Data, Sort, Filter Example Range). |

| Extract sentiment | It analyses the sentiment expressed in each of the comments using the model indicated in parameters (aylien, sentiwordnet, vader, etc.). Vader has been selected. It detects the relevant words included in each comment and assigns them a score previously established in the dictionary. It performs a global computation of all the words included in the same comment, from which the polarity is obtained. |

| Generate attributes | A conditional formula translates polarity into the expressions “positive”, “negative” and “neutral”. |

| Extract Topics from Data (LDA) | It groups the comments into several topics according to their content and determines the keywords of each topic according to importance. |

| Process Document from Data | It contains the following operators. Tokenise: splits each comment into a sequence of independent words (tokens). Transform Cases: converts all tokens to lower case. Filter Stopwords: removes articles, pronouns, etc. Filter Tokens by Length: keeps words of 4 to 25 characters. |

| WordList to Data | It counts the occurrences of each word. |

| Sort | It sorts the words according to the number of occurrences. |

| Filter Example Range | It filters the 20 most frequent words. |
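The word-frequency branch of the process (Process Document from Data, WordList to Data, Sort, Filter Example Range) can be approximated in a few lines of Python. This is a rough sketch, not the RapidMiner implementation: the tokenisation rule, the tiny stopword list and the sample comments are assumptions made for illustration.

```python
import re
from collections import Counter

# Tiny illustrative stopword list (an assumption; RapidMiner ships its own).
STOPWORDS = {"the", "a", "an", "and", "or", "is", "are", "this", "that", "with"}


def top_terms(comments, n=20, min_len=4, max_len=25):
    """Return the n most frequent terms as (word, total occurrences) pairs."""
    counts = Counter()
    for comment in comments:
        tokens = re.findall(r"[A-Za-z]+", comment)             # Tokenise
        tokens = [t.lower() for t in tokens]                    # Transform Cases
        tokens = [t for t in tokens if t not in STOPWORDS]      # Filter Stopwords
        tokens = [t for t in tokens if min_len <= len(t) <= max_len]  # Filter by length
        counts.update(tokens)                                   # WordList to Data
    return counts.most_common(n)                                # Sort + Filter Example Range


sample_comments = [
    "The vaccine passport is a government decision",
    "Stop the passport! Freedom for people",
    "People want the vaccine",
]
print(top_terms(sample_comments, n=3))
```

With the three sample comments, the most frequent terms are "vaccine", "passport" and "people", each with two occurrences; short words such as "for" are dropped by the length filter.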

Results

The results are term frequency ranking, topics ranking and sentiment analysis.

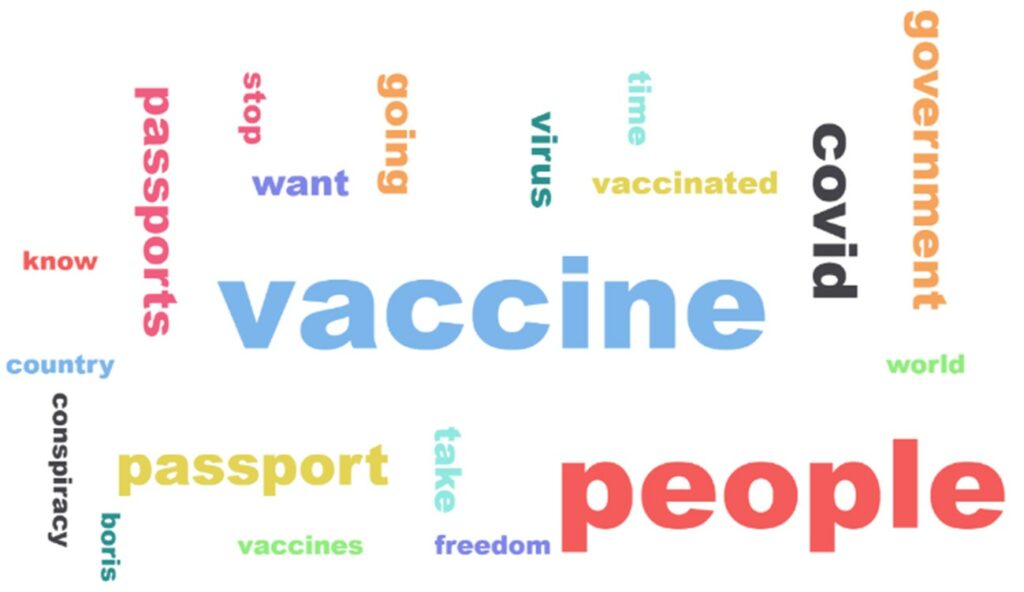

- 1. Frequency of terms

Words such as “government” (734 appearances in total), “stop”, “freedom”, “conspiracy”, “boris”, “time” and “country” refer to ethical issues such as freedom, already mentioned in the literature review as one of the most contentious aspects, as well as to the differences between countries (“country”). They also reflect the need to “buy time” to “stop” the virus, in a positive sense, or to “stop COVID-19 Passport”, in a negative sense, which is consistent with the presence of words related to UK politics and its leaders, the government and conspiracy theories.

| No. | Word | Documents | Total |

| 1 | vaccine | 1678 | 2247 |

| 2 | people | 1640 | 2234 |

| 3 | covid | 813 | 1084 |

| 4 | passport | 776 | 894 |

| 5 | passports | 664 | 746 |

| 6 | government | 647 | 734 |

| 7 | go | 558 | 633 |

| 8 | take | 524 | 597 |

| 9 | want | 485 | 550 |

| 10 | virus | 482 | 639 |

| 11 | vaccinated | 449 | 624 |

| 12 | stop | 428 | 489 |

| 13 | time | 423 | 458 |

| 14 | world | 392 | 462 |

| 15 | freedom | 369 | 434 |

| 16 | conspiracy | 358 | 404 |

| 17 | vaccines | 355 | 456 |

| 18 | country | 347 | 378 |

| 19 | boris | 346 | 377 |

| 20 | visit | 343 | 392 |

Figure 3. Word cloud

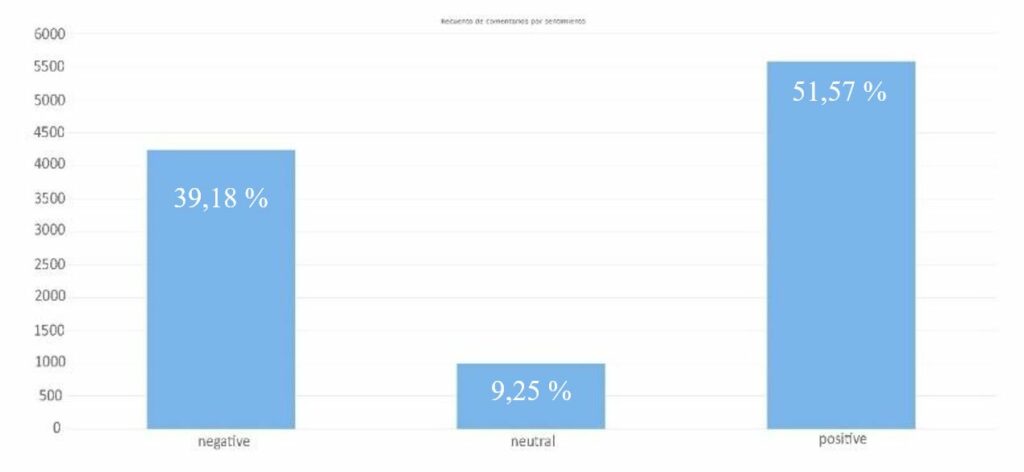

- 2. Sentiment analysis

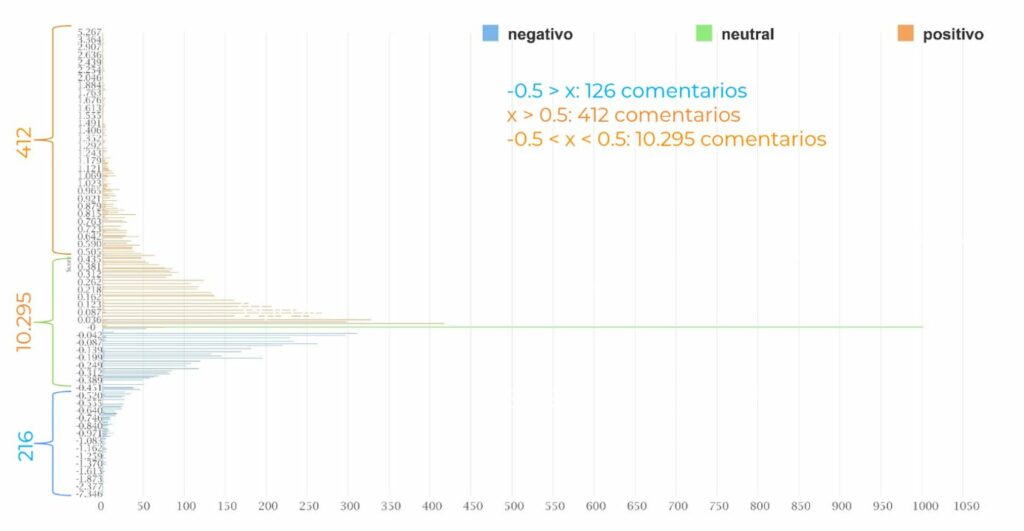

The sentiment analysis statistics show a majority of positive comments. The following graph shows the count of comments after sentiment analysis: 51.57% positive, 39.18% negative, 9.25% neutral.

Graph 1. Comment count by sentiment

However, this information is complemented by the fact that only 126 comments have a score lower than -0.5 and 412 have a score higher than 0.5. The rest of the comments are between -0.5 and 0.5. As the next graph shows, most comments have values close to neutrality, on either the positive or the negative side, forming a bell-shaped (Gaussian) distribution. Note that only comments scored with an exact 0 are considered neutral; all others are classified as positive or negative, regardless of how strongly they express that sentiment.
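The labelling rule described here can be sketched as a small function. The polarity scores below are made up for illustration, and the function is an assumption about how the conditional formula in the Generate attributes operator behaves, not a copy of it.

```python
from collections import Counter


def label(polarity: float) -> str:
    """Map a polarity score to a label; only an exact 0 counts as neutral."""
    if polarity > 0:
        return "positive"
    if polarity < 0:
        return "negative"
    return "neutral"


scores = [0.8, 0.1, -0.05, 0.0, -0.7]  # made-up polarity scores
labels = Counter(label(s) for s in scores)
strong = sum(1 for s in scores if abs(s) > 0.5)  # clearly polarised comments

print(labels)
print(strong)
```

Under this rule a comment scored 0.1 counts as positive just like one scored 0.8, which is why the share of positive comments should be read together with the distribution of scores.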

Graph 2. Distribution of comments according to their polarity

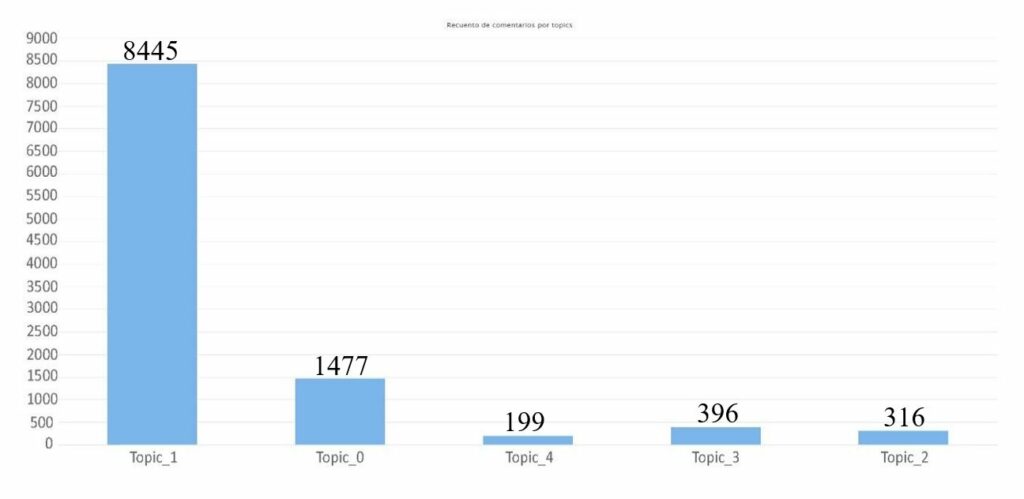

- 3. Classification according to topics

The comments have been automatically grouped according to their content into different themes using the Latent Dirichlet Allocation (LDA) model. The result obtained is a classification into 5 groups of all the comments analysed, as well as a ranking of the most frequent terms in each of these 5 topics.

| | Topic 0 | Topic 1 | Topic 2 | Topic 3 | Topic 4 |

| Name | Conspiracy theory | General views on obtaining the passport | Health issues | Citizens’ rights | Concerns about citizen security |

| Frequently used terms | conspiracy, theory, Boris (Prime Minister of the UK), rights | person, vaccinations, passport, want, need, government | immunity, deaths, vaccines, effects, experimental, cases, health | freedom, government, control, stop | crime, offender, punishment, news |

| Likes | 8030 | 39534 | 1689 | 1449 | 694 |

| Comments | 1477 | 8445 | 316 | 396 | 199 |

| Likes/comments | 5.43 | 4.68 | 5.34 | 3.65 | 3.48 |

Table 3. Classification by topics
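The likes-per-comment row can be reproduced from the Likes and Comments rows. In the sketch below the ratios are truncated (not rounded) to two decimals; this is an assumption inferred from the reported figures, which it matches for all five topics.

```python
import math

likes = [8030, 39534, 1689, 1449, 694]      # Topics 0 to 4
comments = [1477, 8445, 316, 396, 199]

# Truncate to two decimals (assumed convention, see the note above).
ratios = [math.floor(100 * l / c) / 100 for l, c in zip(likes, comments)]

print(ratios)         # [5.43, 4.68, 5.34, 3.65, 3.48]
print(sum(comments))  # 10833
```

The comment counts add up to the 10,833 first-level comments retained after filtering, so every comment is assigned to exactly one topic.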

Graph 3. Comment count per topic

Conclusion, managerial implications and limitations

In conclusion, the methodology used to analyze comments on the COVID-19 passport has limitations. The polarity of sentiment in the comments may differ from the sentiment towards the passport, and ironic comments can cause prediction errors. Despite these limitations, the interpreted conclusions from the results indicate numerous criticisms both for and against the passport. Supporters argue for the need to resume daily life and revive the economy, while exercising caution against the virus. Opponents express concerns about the vaccine’s efficacy. The most concerning issues include adverse vaccine side effects, unequal vaccine availability across countries (impacting the usefulness of the passport in a globalized world), and governmental management of the crisis. Future research should involve a more comprehensive analysis of identified criticisms and concerns, examining these aspects across different demographic groups using algorithms for gender, age, and location detection in social networks, which is an emerging field in artificial intelligence.

Learning from this pandemic, it is crucial to manage it globally to ensure global security. The lack of protection affects not only poor communities, but the entire planet. A clear and transparent policy is needed to address data privacy concerns and combat misinformation on social media, avoiding uncertainty among the population.

References

Shin, H., Kang, J., Sharma, A., & Nicolau, J. L. (2021). The Impact of COVID-19 Vaccine Passport on Air Travelers’ Booking Decision and Companies’ Financial Value. Journal of Hospitality & Tourism Research, XX, No. X, 1–10. https://doi.org/10.1177/10963480211058475

Sharun, K., Tiwari, R., Dhama, K., Rabaan, A. A., & Alhumaid, S. (2021). COVID-19 vaccination passport: prospects, scientific feasibility, and ethical concerns. Human Vaccines & Immunotherapeutics, 17(11), 4108–4111. https://doi.org/10.1080/21645515.2021.1953350

Saura, J. R., Reyes-Menendez, A., & Alvarez-Alonso, C. (2018). Do Online Comments Affect Environmental Management? Identifying Factors Related to Environmental Management and Sustainability of Hotels. Sustainability, 10(9). https://doi.org/10.3390/su10093016

Malawani, A. D., Nurmandi, A., Purnomo, E. P., & Rahman, T. (2020). Social media in aid of post disaster management. Transforming Government: People, Process and Policy, 14(2), 237–260. https://doi.org/10.1108/TG-09-2019-0088/FULL/PDF

Lopez-Martinez, R., & Sierra, G. (2020). Research Trends in the International Literature on Natural Language Processing, 2000-2019 — A Bibliometric Study. Journal of Scientometric Research, 9, 310–318. https://doi.org/10.5530/jscires.9.3.38

Kolchyna, O., Souza, T. T. P., Treleaven, P., & Aste, T. (2015). Twitter Sentiment Analysis: Lexicon Method, Machine Learning Method and Their Combination. https://doi.org/10.48550/arxiv.1507.00955

Khan, M. L., Malik, A., Ruhi, U., & Al-Busaidi, A. (2022). Conflicting attitudes: Analyzing social media data to understand the early discourse on COVID-19 passports. Technology in Society, 68, 101830. https://doi.org/10.1016/J.TECHSOC.2021.101830

Gstrein, O. J. (2021). The EU Digital COVID Certificate: A Preliminary Data Protection Impact Assessment. European Journal of Risk Regulation, 12(2), 370–381. https://doi.org/10.1017/err.2021.29

Fargnoli, V., Nehme, M., Guessous, I., & Burton-Jeangros, C. (2021). Acceptability of COVID-19 Certificates: A Qualitative Study in Geneva, Switzerland, in 2020. Frontiers in Public Health, 9, 682365. https://doi.org/10.3389/FPUBH.2021.682365/FULL

Artificial intelligence in international business research: a systematic literature review

Koskinen, J.

johanna.koskinen@haaga-helia.fi

Haaga-Helia University of Applied Sciences & Turku School of Economics, Finland

Keywords: artificial intelligence, AI, international business, international business research, systematic literature review

Introduction

Artificial intelligence (AI) can be described as “a system’s ability to correctly interpret external data, learn from that data, and use what it has learned to achieve specific goals and tasks through flexible adaptation” (Kaplan & Haenlein, 2019, p. 17). One of its main benefits is that it can automate a significant amount of not only mechanical tasks, but also cognitive ones (Von Krogh, 2018). Moreover, the new type of AI, “generative AI”, such as ChatGPT, is specifically designed to create new content. Experts, such as Bill Gates (2023), are comparing AI’s impact on business to the internet, which has had a transformative impact on international business, particularly on newer types of enterprises like International New Ventures (INVs) and Born Globals (Etemad, 2022). Given that AI is already in many parts of our lives, such as smartphones, it is not only important but also critical to understand its role in international business (IB).

My Ph.D. research topic is how AI impacts the internationalization of SMEs at the level of entrepreneurial ecosystems. I am aiming to contribute specifically to IB research. In order to dive deeper into the topic and to advance its theoretical analysis, I conducted a systematic literature review on current AI research in IB. Therefore, my research question is: What is the current state of knowledge regarding AI within IB research?

Methods

I conducted a systematic literature review adapting the method presented by Kaartemo and Helkkula (2018) by searching and assessing literature, and analyzing and synthesizing the findings. The stages of my research are presented below.

Stage 1: I consulted an IB journal ranking article (Tüselmann et al., 2016) and I used the SCImago Journal Rank (SJR) indicator to assess the quality and impact of IB journals. I chose to focus specifically on the leading IB journals because they attract researchers to publish their highest quality research.

Stage 2: I searched for articles in the Scopus database with the main keywords related to artificial intelligence in the title, abstract or keywords: artificial intelligence, AI, machine learning, deep learning, neural network, large language models, robots, robotics, natural language processing. I assessed the results and excluded any articles that mentioned only part of a keyword, e.g. learning. I then reviewed the full papers to determine whether each article was appropriate for this research. This resulted in 18 papers altogether: 6 theoretical or conceptual, 4 review, 6 empirical quantitative and 2 empirical qualitative papers.

The chosen journals are listed below with the time period covered and number of publications found in brackets: The Journal of International Business Studies (1975-8/2023, 4), The Journal of World Business (1997-8/2023, 4), Global Strategy Journal (2014-8/2023, 2), Management and Organization Review (2005-8/2023, 0), International Business Review (1993-8/2023, 3), Management International Review (2005-8/2023, 0), Journal of International Management (1998-2023, 3), Thunderbird International Business Review (2005-2023, 3), Critical Perspectives on International Business (2005-2023, 0), Transnational Corporations (2005-2023, 0) and Multinational Business Review (2003-2023, 1).

Stage 3: I analyzed the articles by documenting all relevant data in an Excel spreadsheet, including journal and author names, year, purpose, method and main findings. I grouped them into three categories, which emerged from the data. The first two are the most relevant to my PhD research.

Results and findings

I grouped the articles within this systematic literature review (18) into three categories representing how AI is discussed in IB literature. Two articles were categorized into multiple categories. The main findings are presented below.

Category 1: Impact of advanced technologies on the firm (8 papers)

The first stream of studies focuses mainly on advanced technologies as a whole, of which AI is a part, and discusses their implications for IB at the firm level, as no studies focusing solely on AI’s impact were available. These studies come from a short period of time (2017-2023), indicating the topic’s novelty. They mainly emphasize the positive impacts of advanced technologies, bringing attention to how they may:

- reduce transaction costs (Strange & Zucchella, 2017; Ahi et al., 2022)

- facilitate international division and management of labor (Strange & Zucchella, 2017; Ahi et al., 2022)

- improve productivity, and change the nature of work and how it is structured (Ahi et al., 2022; Brakman et al., 2021) for example via automation and robotization of work (Brakman et al., 2021)

- enhance decision-making (Strange & Zucchella, 2017; Ahi et al., 2022)

- simplify exchange of knowledge (Ahi et al., 2022)

- increase trust in business networks (Ahi et al., 2022)

- impact where to locate manufacturing activities (Strange & Zucchella, 2017)

- enable supply chains to become more resilient and flexible (Gupta & Jauhar, 2023)

Furthermore, it became evident that their application specifically in managing global talent (Malik et al., 2021; Brakman et al., 2021) and supply chains (Strange & Zucchella, 2017; Ahi et al., 2022; Gupta & Jauhar, 2023) has received significant academic attention.

Although many studies raise awareness of the negative impacts of advanced technologies, such as cybersecurity risks and ethical concerns around data privacy (Strange & Zucchella, 2017; Ahi et al., 2022; Benito et al., 2022) and the long-term impact of AI on human development (Ahi et al., 2022), they also identify gaps in current research on the negative aspects and encourage IB scholars to research them more (Ahi et al., 2022; Benito et al., 2022). In addition, Ahi et al. (2022) emphasize the need to research the negative impacts on work, for example ethical issues, widening income inequality and unemployment.

Category 2: Impact of AI on the business ecosystem (6 papers)

The second stream of studies focused on the potential implications of AI for the business ecosystem. None of the reviewed articles deals exclusively with this topic, but they do raise some important aspects related to it. These are recent articles (2020-2022), demonstrating the topic’s novelty and the need for further research.

Not only is the ecosystem important in the international scaling of digital solutions, such as AI (Brakman et al., 2021), but AI is also seen to be important for the ecosystem. AI is seen to help companies adapt to their business environments by helping them tackle grand challenges such as environmental sustainability (Ahi et al., 2022), by being a vehicle for enhancing knowledge spillovers within the ecosystem (Cetindamar et al., 2020), and by supporting managers in identifying and analyzing political risks (Hemphill & Kelley, 2021), while being seen as a grand challenge itself (Benito et al., 2022). Moreover, it is also important for governments to support different actors in the ecosystem in getting more productivity from existing systems and thus improving current IT tools, because this focus on algorithm development may affect a country’s competitive advantage, as is the case for the U.S., the largest contributor to algorithm development (Thompson et al., 2021).

Category 3: AI as a tool for conducting IB research (8 papers)

The third stream of studies focuses both on describing the current state of methodological advances in IB research through the use of AI and on employing it. This seems to be a topic of wide interest over a longer time period (2007-2023). Regarding the use of AI, these studies not only highlight the usefulness of AI in conducting primarily quantitative IB research (Lindner et al., 2022; Delios et al., 2023; Veiga et al., 2023), but also emphasize that leveraging these new methods, which make use of the deeper and larger amount of data available, is crucial for IB research to remain a relevant field in the future (Delios et al., 2023).

The majority of studies employ AI in their research (Nair et al., 2007; Brouthers et al., 2009; Garbe & Richter, 2009; Messner, 2022; Vuorio & Torkkeli, 2023). These studies utilize AI in researching collaborative venture formation (Nair et al., 2007), international market selection (Brouthers et al., 2009), international organizational structure (Garbe & Richter, 2009), cultural heterogeneity (Messner, 2022) and the prediction of early internationalization (Vuorio & Torkkeli, 2023).

Conclusions

This systematic literature review explored the current state of research on AI in IB. The method was adapted from Kaartemo and Helkkula (2018), and the study analyzed a collection of 18 relevant articles and grouped them into three categories. The main contribution of this research is that it demonstrates a lack of high-quality research specifically on AI in the IB research field, despite the current “hype” around AI. From a temporal perspective, this seems to be a novel area of research, because the majority of discussions have emerged only recently in IB. Despite the low number of publications, the three categories portray the current state of knowledge well.

The first category, “Impact of advanced technologies on the firm,” discussed the impacts of AI within the broader context of advanced technologies. Despite the topic’s novelty, the studies demonstrated the potential positive impacts of AI adoption, including reductions in transaction costs (Strange & Zucchella, 2017; Ahi et al., 2022), improvements in decision-making processes (Strange & Zucchella, 2017; Ahi et al., 2022), and the transformation of work structures (Ahi et al., 2022; Brakman et al., 2021). It also became apparent that the majority of research focuses on the positive sides of AI for business and that less emphasis is put on the negative aspects (Ahi et al., 2022; Benito et al., 2022), which calls for more research on this topic area.

The second category, “Impact of AI on the business ecosystem,” focused on the impact of AI on the business ecosystem. While the reviewed articles did not solely focus on this topic, they highlighted the significance of AI in facilitating adaptation (Ahi et al., 2022), knowledge sharing (Cetindamar et al., 2020), and identifying political risks (Hemphill & Kelley, 2021) within business environments. Despite the scarcity of research on this topic, the publications do bring insights into how AI is also impacting the business ecosystem.

The third and final category, “AI as a tool for conducting IB research”, covered studies that either described the current state of AI utilization in IB research or employed AI themselves. It brought attention to AI being useful in IB research (Lindner et al., 2022; Delios et al., 2023; Veiga et al., 2023), and many examples of its use were found, for example in researching collaborative venture formation (Nair et al., 2007) and predicting early internationalization (Vuorio & Torkkeli, 2023).

Future research

The majority of articles focus on the impact of AI on the firm or AI as a tool in quantitative research. Less emphasis was paid to AI’s impact at the ecosystem level, which I intend to research further in my PhD. Also, the majority of research focuses only on the positive sides of AI for business and less emphasis is put on the negative aspects (Ahi et al., 2022; Benito et al., 2022). AI in qualitative research could also be researched more. Overall, it was evident that it is important to develop IB research both by researching the impact of AI on IB, which Ahi et al. (2022) bring out as well, and how AI may be utilized in IB research even further.

Based on this review I suggest the following broader topic areas for future research:

- What are the negative impacts of AI at different levels (individual, business, environment)?

- How does AI impact the internationalization of businesses at the ecosystem level?

- How can AI be employed to enhance qualitative IB research?

Limitations

The main limitation concerned the specificity of AI terminology. Much of the existing IB research seems to focus on advanced technologies on a general level rather than focusing only on AI, a point also raised by Ahi et al. (2022). This brought about the challenge of needing to use more general level technology terminology in order to find articles, but at the same time questioning their relevance for this AI-centric research.

References

Ahi, A. A., Sinkovics, N., Shildibekov, Y., Sinkovics, R. R., & Mehandjiev, N. (2022). Advanced technologies and international business: A multidisciplinary analysis of the literature. International Business Review, 31(4), 101967.

Benito, G. R., Cuervo‐Cazurra, A., Mudambi, R., Pedersen, T., & Tallman, S. (2022). The future of global strategy. Global Strategy Journal, 12(3), 421-450.

Bill Gates (2023). The Age of AI has begun. The blog of Bill Gates. Retrieved from https://www.gatesnotes.com/The-Age-of-AI-Has-Begun on 10.8.2023.

Brakman, S., Garretsen, H., & van Witteloostuijn, A. (2021). Robots do not get the coronavirus: The COVID-19 pandemic and the international division of labor. Journal of International Business Studies, 52, 1215-1224.

Brouthers, L. E., Mukhopadhyay, S., Wilkinson, T. J., & Brouthers, K. D. (2009). International market selection and subsidiary performance: A neural network approach. Journal of World Business, 44(3), 262-273.

Cetindamar, D., Lammers, T., & Zhang, Y. (2020). Exploring the knowledge spillovers of a technology in an entrepreneurial ecosystem—The case of artificial intelligence in Sydney. Thunderbird International Business Review, 62(5), 457-474.

Delios, A., Welch, C., Nielsen, B., Aguinis, H., & Brewster, C. (2023). Reconsidering, refashioning, and reconceptualizing research methodology in international business. Journal of World Business, 58(6), 101488.

Etemad, H. (2022). The emergence of international small digital ventures (ISDVs): Reaching beyond Born Globals and INVs. Journal of International Entrepreneurship, 20(1), 1-28.

Garbe, J. N., & Richter, N. F. (2009). Causal analysis of the internationalization and performance relationship based on neural networks—advocating the transnational structure. Journal of International Management, 15(4), 413-431.

Gupta, M., & Jauhar, S. K. (2023). Digital innovation: An essence for Industry 4.0. Thunderbird International Business Review.

Hemphill, T. A., & Kelley, K. J. (2021). Artificial intelligence and the fifth phase of political risk management: An application to regulatory expropriation. Thunderbird International Business Review, 63(5), 585-595.

Kaartemo, V., & Helkkula, A. (2018). A Systematic Review of Artificial Intelligence and Robots in Value Co-creation: Current Status and Future Research Avenues. Journal of Creating Value, 4(2), 211–228.

Kaplan, A., & Haenlein, M. (2019). Siri, Siri, in my hand: Who’s the fairest in the land? On the interpretations, illustrations, and implications of artificial intelligence. Business Horizons, 62(1), 15-25.

Lindner, T., Puck, J., & Verbeke, A. (2022). Beyond addressing multicollinearity: Robust quantitative analysis and machine learning in international business research. Journal of International Business Studies, 53(7), 1307-1314.

Malik, A., De Silva, M. T., Budhwar, P., & Srikanth, N. R. (2021). Elevating talents’ experience through innovative artificial intelligence-mediated knowledge sharing: Evidence from an IT-multinational enterprise. Journal of International Management, 27(4), 100871.

Messner, W. (2022). Advancing our understanding of cultural heterogeneity with unsupervised machine learning. Journal of International Management, 28(2), 100885.

Nair, A., Hanvanich, S., & Cavusgil, S. T. (2007). An exploration of the patterns underlying related and unrelated collaborative ventures using neural network: Empirical investigation of collaborative venture formation data spanning 1985–2001. International Business Review, 16(6), 659-686.

Strange, R., & Zucchella, A. (2017). Industry 4.0, global value chains and international business. Multinational Business Review, 25(3), 174-184.

Tatarinov, K., Ambos, T. C., & Tschang, F. T. (2023). Scaling digital solutions for wicked problems: Ecosystem versatility. Journal of International Business Studies, 54(4), 631-656.

Thompson, N. C., Ge, S., & Sherry, Y. M. (2021). Building the algorithm commons: Who discovered the algorithms that underpin computing in the modern enterprise? Global Strategy Journal, 11(1), 17-33.

Tüselmann, H., Sinkovics, R. R., & Pishchulov, G. (2016). Revisiting the standing of international business journals in the competitive landscape. Journal of World Business, 51(4), 487-498.

Veiga, J. F., Lubatkin, M., Calori, R., Very, P., & Tung, Y. A. (2000). Using neural network analysis to uncover the trace effects of national culture. Journal of International Business Studies, 31, 223-238.

Von Krogh, G. (2018). Artificial intelligence in organizations: New opportunities for phenomenon-based theorizing. Academy of Management Discoveries.

Vuorio, A., & Torkkeli, L. (2023). Dynamic managerial capability portfolios in early internationalising firms. International Business Review, 32(1), 102049.

Cognitive Cybersecurity: An approach applied to Phishing

José Mariano Velo

josvelmor2@alum.us.es

Ángel Jesús Varela-Vaca

ajvarela@us.es

Rafael M. Gasca

gasca@us.es

IDEA Research Group, Universidad de Sevilla, Spain

Keywords: Phishing, Cognitive Computing, Deep Learning, AI, Machine Learning

Abstract

Cognitive systems are computer systems that use the theories, methods, and tools of various computing disciplines to model cognitive tasks or processes, emulating human cognition. One application of these systems in cybersecurity is the detection of phishing emails. Organisations receive thousands or millions of emails daily, all of which are processed by different security filters that classify them as legitimate emails, spam, or malware and phishing emails. Despite all the measures taken, there is always a percentage of malicious emails which, for various reasons, pass these filters. Ironically, security analysts could easily identify these emails if they were able to review them individually. For this reason, we present a proposal to improve the detection of such attacks based on a cognitive approach. The proposal focuses on defining the process, potential techniques and metrics, using engines for extended decision-making based on decision models.

Introduction & Background

Cognitive Computing (Raghavan, 2016) is an emerging field born of the confluence of several “sciences” such as cognitive science, neuroscience, data science and other computer technologies. The union of those sciences and new computing technologies such as Artificial Intelligence (AI), Big Data, Machine Learning (ML), Cloud Computing and others should make it possible to model the process of human cognition artificially. Cognitive computing includes the modelling of cognition among its processes, giving systems that analyse large amounts of data a better understanding of their environment, so that they can generate results and predictions that improve decision making.

Cognitive systems are computer systems that employ the theories, methods, and tools of various computing disciplines to model cognitive tasks or processes, emulating human cognition (Raghavan, 2016). From the point of view of cognitive computing, the human brain is seen as a highly parallelised information processor (Raghavan, 2016). These systems differ from traditional systems in that they are adaptive: they learn and evolve over time, incorporating context into computing. They can “feel” the environment, think and act independently and, in particular, deal with uncertain, ambiguous, and incomplete information, as well as retain important data in memory for future use.

E-mail security systems basically perform their analyses by checking blacklisted domains and similar reputation data. They usually work well against global, indiscriminate phishing campaigns, since the intelligence data associated with such campaigns are usually shared from first detection and incorporated into reputation repositories for further use and query by other systems. However, those systems usually fail when they parse targeted phishing emails, which are meticulously prepared to bypass the filters and come from generally “clean” domains.

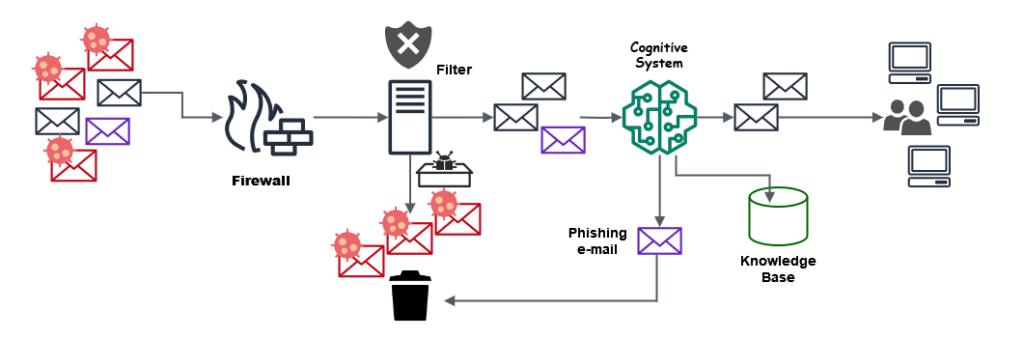

Figure 1. Incoming e-mail flow.

Therefore, we propose a cognitive system that improves the analysis performed by the mail detection system when classifying mail as legitimate or malicious and that, if necessary, leaves the final decision in the hands of a human analyst. That is, we intend to improve the analysis of emails containing elaborate phishing by means of an approach based on a cognitive system. Some authors, such as Sumari (2017), call this type of approach CAI (Cognitive Artificial Intelligence). Figure 1 shows a scheme of the proposed cognitive system within the flow of incoming emails in a standard organisation: a spear-phishing email (shown in purple) that has bypassed the initial filter is finally discarded after being analysed by the proposed system. The main contributions of this work are:

- Define the complete cognitive process for the case of elaborate phishing.

- Study the metrics and parameters for the information-processing phase.

- Define new metrics and parameters to analyse emails.

- Propose a decision-making system based on decision models.

Cognitive System Proposal for Cybersecurity

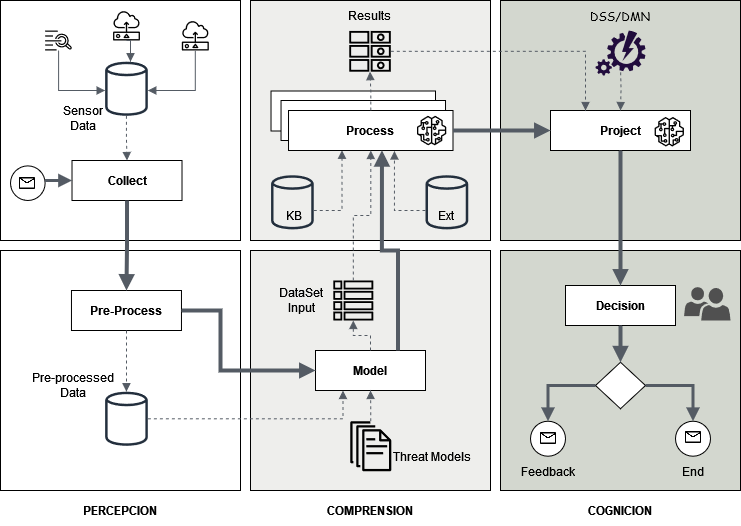

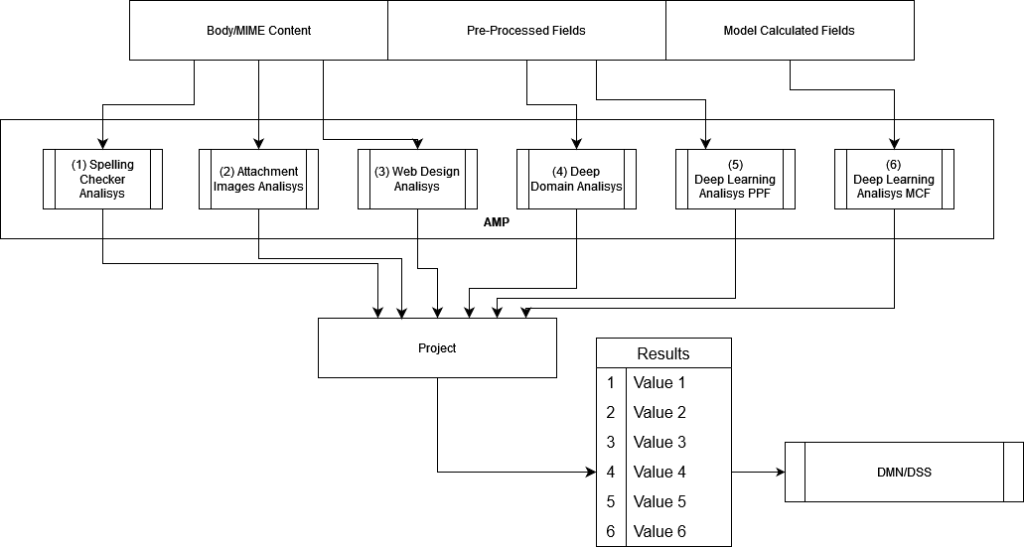

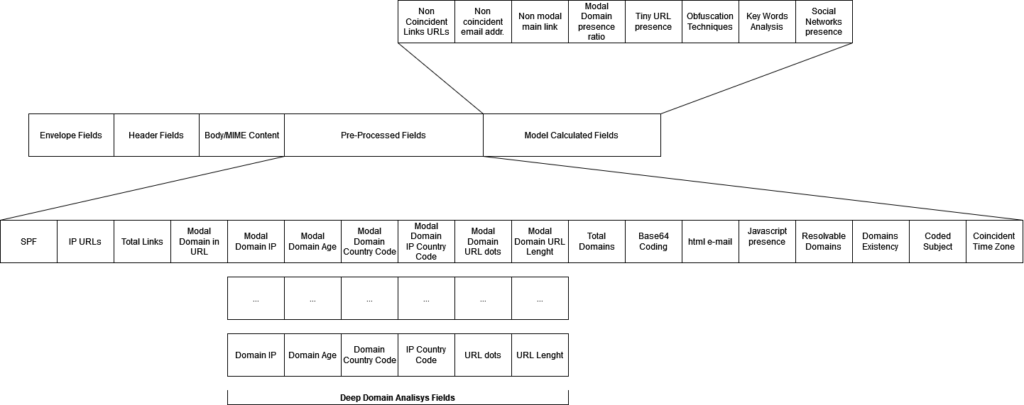

Our proposed cognitive system, as can be seen in Figure 2, consists of three fundamental parts or phases, each divided into two sub-phases, all of which are related to the modelling of the cognitive process. The different phases are explained in detail below.

Figure 2. Proposed cognitive model.

Perception

This phase corresponds to the collection and preparation of data. In this phase, the system “observes” the environment, and data is extracted and prepared by pre-processing for analysis in the next phase. This phase is the equivalent of a human being using the different senses as the initial part of cognition. It has two sub-phases, “Collect” and “Pre-process”. “Collect” is the sub-phase in which we extract, in a controlled manner, the data from the different sensors or sources of raw environmental data. As we can see in Figure 2, several input sources feed a data warehouse where the data are collected. Depending on how we apply the system, we can establish which types of data to extract from the environment, or we can extract all available data and then select those that are valid for a given task or process. The “Pre-process” sub-phase cleans, orders and classifies the incoming data for the next phase of the system. Its mission is to eliminate what is useless and to generate new data from calculations and simple queries, so that we can send the next phase a data set that is as large and accurate as possible.

Understanding

In this phase, the data acquired are processed according to the established parameters and the model defined for a certain threat or cybersecurity problem. Like the previous phase, it has two sub-phases. The first, called “Model”, models and orders the incoming pre-processed data according to the “Threat Model” that we want to apply. Here, several calculations and more elaborate queries are carried out to present a discretised input dataset that is as complete as possible to the next sub-phase. The second sub-phase, which we call “Process”, processes that input and can also incorporate the feedback data obtained in previous iterations, as well as data from external sources such as STIX, YARA rules and others that may be necessary to complement and enrich the chosen “Threat Model”. It should be noted that these data cannot be incorporated in the perception phase, given that they provide a schema, ontology, or higher-level knowledge of the input data. This second sub-phase takes place in what we have called Multiple Parallel Analysis (AMP) and is therefore one of the most important of the whole process. Here, different techniques, such as AI/ML, NLP (Natural Language Processing), visual analysis and others, are executed in parallel to produce their various outputs as a block of results, as seen in Figure 3. To make a comparison with human cognition, this is where the brain processes all the information it has obtained from the different senses, complementing it with higher-level processes such as language, intelligence, and others to understand what is happening in the environment.

Figure 3. AMP flowchart.

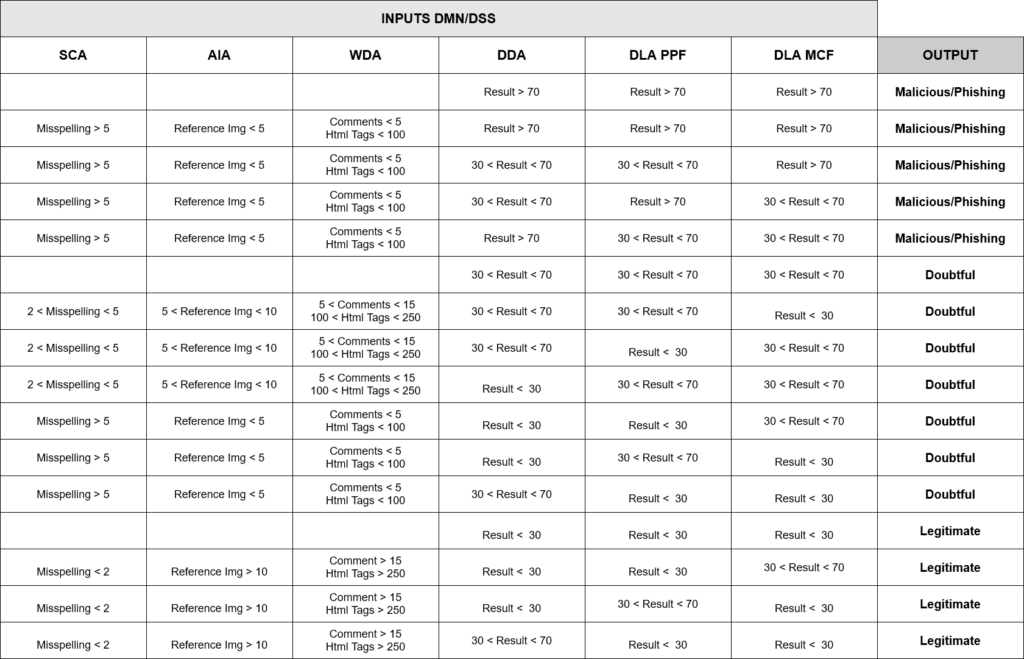
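The idea of running several analyses in parallel and gathering their outputs into one block of results can be sketched as follows. This is only a minimal illustration, not the authors' implementation: the three analyser functions, their scoring rules and the field names of the `email` record are hypothetical stand-ins for the AI/ML, NLP and other engines named in the text.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical analysers: each receives the modelled e-mail record
# and returns a suspicion score between 0.0 and 1.0.
def keyword_analysis(email: dict) -> float:
    urgent = {"urgent", "verify", "password", "suspended"}
    words = email["body"].lower().split()
    hits = sum(1 for w in words if w.strip(".,!?:;") in urgent)
    return min(1.0, hits / 3)

def link_analysis(email: dict) -> float:
    return 1.0 if email["non_coincident_links"] > 0 else 0.0

def domain_age_analysis(email: dict) -> float:
    return 1.0 if email["domain_age_days"] < 30 else 0.0

ANALYSERS = {
    "keywords": keyword_analysis,
    "links": link_analysis,
    "domain_age": domain_age_analysis,
}

def multiple_parallel_analysis(email: dict) -> dict:
    """Run every analyser concurrently and return one block of results."""
    with ThreadPoolExecutor(max_workers=len(ANALYSERS)) as pool:
        futures = {name: pool.submit(fn, email) for name, fn in ANALYSERS.items()}
        return {name: future.result() for name, future in futures.items()}
```

Each analyser is independent of the others, so a new technique can be added to the dictionary without touching the rest of the pipeline.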

Cognition

In this last and definitive phase, the results obtained from the previous phase are first submitted to a decision support system based on Decision Model and Notation (DMN) to obtain a first answer. This response is the system’s decision, derived from the analysis carried out in the previous phases, and it is a value indicating the probability that the analysed email is phishing. The percentage obtained is then evaluated in the decision sub-phase, which may go as far as requesting human intervention in the process, in what is called HITL (Human in the Loop) (Wu, 2022). The final decision should be fed back to the system for its learning and use in future cases.
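A minimal sketch of how such a decision sub-phase might route an email is given below. The two thresholds and the three outcome labels are assumptions chosen for illustration; the abstract does not specify them.

```python
def route_email(phishing_probability: float,
                discard_at: float = 0.8,
                deliver_below: float = 0.3) -> str:
    """Map a phishing probability to an action.

    The thresholds are hypothetical: scores in the uncertain middle band
    are escalated to a human analyst (human in the loop).
    """
    if not 0.0 <= phishing_probability <= 1.0:
        raise ValueError("probability must lie between 0 and 1")
    if phishing_probability >= discard_at:
        return "discard"
    if phishing_probability < deliver_below:
        return "deliver"
    return "human_review"
```

The analyst's verdict on the escalated cases is what would be fed back to the system for learning.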

The Phishing Problem – Cognitive approach

Our proposal focuses on analysing e-mails with spear phishing and elaborate phishing threats, since these are emails that have managed to pass corporate security controls yet can be identified by a human. This is the “gap” we want to cover, and for this reason, we propose our cognitive system. The data and parameters we propose to analyse in each phase are as follows, as shown in Figure 4:

Figure 4. Proposed dataset.

Perception

Based on the MIME and S/MIME specifications, we gather all possible information from the envelopes, headers, and body fields of incoming e-mails, and send the following to be pre-processed: SPF, IP-type URLs, domain IPs, domain age, number of links, number of domains and sub-domains, URL dots, URL length, modal domain country, domain/IP countries, Base64 encoding, HTML e-mails, domains resolvable to a real IP, JavaScript in the e-mail, UTF-8 coded e-mail subject and coincident time zone.
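Several of these parameters can be derived from the message body with the standard library alone. The sketch below is an illustration under simplifying assumptions, not the authors' extractor: the URL pattern is deliberately naive, the feature names are hypothetical, and "URL dots" and "URL length" are taken as the maximum over all links found.

```python
import ipaddress
import re
from collections import Counter
from urllib.parse import urlsplit

# Naive pattern: an http(s) URL runs until whitespace, a quote or an angle bracket.
URL_PATTERN = re.compile(r"https?://[^\s\"'<>]+")

def is_ip_host(host: str) -> bool:
    """Return True when the host part is a literal IP address."""
    try:
        ipaddress.ip_address(host)
        return True
    except ValueError:
        return False

def url_features(body: str) -> dict:
    urls = URL_PATTERN.findall(body)
    hosts = [urlsplit(u).hostname or "" for u in urls]
    host_counts = Counter(h for h in hosts if h)
    return {
        "number_of_links": len(urls),
        "number_of_domains": len(host_counts),
        "ip_type_urls": sum(is_ip_host(h) for h in hosts),
        "max_url_dots": max((u.count(".") for u in urls), default=0),
        "max_url_length": max((len(u) for u in urls), default=0),
        # Modal domain: the host that appears most often in the message.
        "modal_domain": host_counts.most_common(1)[0][0] if host_counts else None,
    }
```

Parameters such as domain age or country would need external lookups (for example WHOIS or geolocation data) and are left out of this sketch.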

Understanding

This second phase, as we have seen previously, is where the data set is assembled and prepared for analysis. It is subdivided into two sub-phases, “Model” and “Process”. First, we add data to the data model by calculating certain parameters, all aimed at providing as accurate a data set as possible to the engines of the following sub-phase. We propose to obtain, calculate, and incorporate into the set the following parameters: non-coincident URL links, non-coincident e-mail addresses, non-modal main link, modal domain presence ratio, tiny URL presence, obfuscation techniques, keyword analysis, and presence of social network links.
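As an illustration of one of these parameters, the sketch below counts "non-coincident URL links", interpreted here (an assumption) as anchors whose visible text looks like a web address but whose `href` points to a different host. The class and function names are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit

class LinkCollector(HTMLParser):
    """Collect (href, visible text) pairs for every <a> element."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href") or ""
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None

def host_of(value: str) -> str:
    """Extract the lower-cased host, tolerating text without a scheme."""
    if "://" not in value:
        value = "http://" + value
    return (urlsplit(value).hostname or "").lower()

def non_coincident_links(html: str) -> int:
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    count = 0
    for href, text in collector.links:
        # Only compare when the visible text itself looks like an address.
        looks_like_url = "." in text and " " not in text
        if looks_like_url and host_of(text) != host_of(href):
            count += 1
    return count
```

Links whose text is ordinary wording, such as "Click here", are ignored by this check and would have to be covered by other parameters.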

Cognition

In the last phase, the cognitive process culminates. It begins with a first sub-phase, “Project”, in which we project all the results obtained in the previous sub-phase (“Process”) and generate, through a decision-making system based on DMN models as proposed by Valencia-Parra (2021), an output with the result of the entire process, expressed as a percentage of probability as a function of the weights assigned to the different decision model tables. DMN (Decision Model and Notation) is an OMG standard based on a descriptive (“if-then”) statement of a decision model. Those weights are shown in Figure 5.

Figure 5. DSS/DMN Table.
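The way a weighted "if-then" table can yield a percentage may be sketched as follows. The rules, field names and weights are invented for illustration and are not the values of Figure 5; the aggregation is similar in spirit to a DMN decision table with a collect-and-sum hit policy, but no DMN engine is used here.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class Rule:
    name: str
    condition: Callable[[dict], bool]  # the "if" part
    weight: float                      # contribution when the rule fires

# Hypothetical rules and weights (they sum to 10 for readability).
RULES = [
    Rule("spf_failed", lambda e: not e["spf_pass"], 3.0),
    Rule("non_coincident_links", lambda e: e["non_coincident_links"] > 0, 3.0),
    Rule("young_domain", lambda e: e["domain_age_days"] < 30, 2.0),
    Rule("tiny_url_present", lambda e: e["tiny_url"], 1.0),
    Rule("urgent_keywords", lambda e: e["urgent_keywords"] > 0, 1.0),
]

def phishing_percentage(email: dict, rules=RULES) -> float:
    """Sum the weights of the rules that fire, as a share of all weights."""
    total = sum(rule.weight for rule in rules)
    fired = sum(rule.weight for rule in rules if rule.condition(email))
    return 100.0 * fired / total
```

Tuning the table then amounts to adjusting the weights, which keeps the decision logic readable for the analysts who review it.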

Conclusions and future work

Cognitive systems should play an important role in the future of security analysis, and so-called cognitive cybersecurity will therefore establish a new paradigm. These systems will also support security analysts in making decisions when detecting, assessing, and dealing with incidents that put the assets of a company or organisation at risk. Even though we are at an early stage of our work, we estimate that the proposal can substantially improve the classification of a given organisation’s incoming mail, as it provides a system that behaves like a human when scanning emails for phishing and other threats. The next step (on which we are working now) is to obtain a dataset that is as complete as possible in order to carry out an exhaustive study of the variables analysed and to measure their suitability and relevance, as well as those of the applied model. We will also tune the parameters of the decision model tables used in the last phase. Likewise, we plan to incorporate other aspects to improve the model, such as the analysis of visual or mail quality, the use of NLP to analyse urgent language in the body or subject of the email, and the analysis of the visual resemblance of domains, among others.

References

V. V. Raghavan, V. N. Gudivada, V. Govindaraju, and C. R. Rao, Cognitive computing: Theory and applications. ISBN: 9780444637444. Elsevier, 2016.

A. D. W. Sumari and A. S. Ahmad, “The application of cognitive artificial intelligence within C4ISR framework for national resilience,” in 2017 Fourth Asian Conference on Defence Technology-Japan (ACDT). IEEE, 2017, pp. 1–8.

X. Wu, L. Xiao, Y. Sun, J. Zhang, T. Ma, and L. He, “A survey of human-in-the-loop for machine learning,” Future Generation Computer Systems, 2022.

A. Valencia-Parra, L. Parody, A. J. Varela-Vaca, I. Caballero, and M. T. Gómez-López, “DMN4DQ: When data quality meets DMN,” Decision Support Systems, vol. 141, p. 113450, 2021.

Human-Computer Interaction in the Age of GenAI: Implications of Simulacra for Service Business

Tuomi, A.

Corresponding author – presenter

aarni.tuomi@haaga-helia.fi

Haaga-Helia University of Applied Sciences, Finland

Ascenção, M.P.

mariopassos.ascencao@haaga-helia.fi

Haaga-Helia University of Applied Sciences, Finland

Keywords: artificial intelligence, generative AI, service sector, simulacra, human-computer interaction

Background of the study

We are living in a time of rapid technological change, whereby new approaches to and applications of information and communication technology (ICT) are transforming the way traditional businesses are operated. Most recently there has been a renaissance of sorts in one technology in particular, artificial intelligence (AI), whereby emerging AI tools are able to generate seemingly realistic content, from images to virtual 3D environments (Davenport & Mittal, 2022). The increase in technological capability, most notably the ability of generative AI (GenAI) to automatically generate high-quality content, sees the traditional business management disciplines, including services marketing and management, enter what some scholars are calling the ‘era of falsity’ (Plangger & Campbell, 2022) and ‘post-truth’ (Keyes, 2004).

First, it should be noted that the concept of ‘falsification’ is not new. As soon as new digital tools have emerged, businesses have tried to make the most of them (Bower, 1998).

Consumers have also been surprisingly quick to adapt to changes in technology. For example, when Photoshop, one of the most prominent photo-editing software packages globally, was released in 1990, there was a real fear that it would lead to grave misuse and large amounts of false content, ultimately eroding people’s trust in visual images altogether (Leetaru, 2019). That did not happen, of course, and despite these fears, the concept of ‘photoshopping’ images and of an image being ‘photoshopped’ soon entered the common lingo. At the same time, it should be noted that an increase in visual literacy does not automatically mean a decrease in the effectiveness of manipulated images in influencing behaviour. For example, in their experimental study, Lazard et al. (2020) demonstrated that even when participants knew an advertisement photo to be manipulated, they still preferred it over a non-manipulated photo.

French philosopher Baudrillard (1994) referred to such ‘falsified’ content as ‘simulacra’. For example, in the context of hospitality and tourism, much of the marketing material put out by brands is digitally manipulated, and the effectiveness of using e.g. F&B related images to boost sales has long been known (Hou et al., 2017). Novel technologies capable of generating high-quality content represent a continuation of this tendency.

However, what academics are noting is that the degree of falsification is rapidly increasing, whereby content (e.g. code, text, images, video) generated by technology has increasingly started to pass as content generated by human software engineers, authors, photographers or animators. For example, Tuomi (2021) used AI to generate human-like restaurant reviews, demonstrating that several AI-generated reviews passed as human-written reviews when their authenticity was evaluated by human judges.

Aim of the study

To that end, this study aims to conceptually explore the types of human-technology interaction that emerge from the use of novel GenAI tools, paying particular attention to the context of service business. Specifically, the paper aims to address the following research question: What are the key implications (costs and benefits) of adopting generative AI applications for different service business stakeholders (businesses, workers, education)?

Methods

Adopting an integrative literature review approach, the study conceptually maps out parallels between advances across three distinct fields of scholarship: service business, AI-human teams, and the concept of authenticity. In particular, the study draws on Baudrillard’s (1994) notion of simulacra.

In his seminal work, Baudrillard proposed that the postmodern society has replaced reality and meaning with symbols and signs, and that, in most situations, human experience is a simulation of reality. More specifically, Baudrillard argues that there are four layers of simulacra: first order simulacra, which are faithful copies of the original, and where the “judge” of authenticity can still discern the reality from the imitation; second order simulacra, which represent a perversion of reality and where the judge of authenticity believes the copy to be an improved, more desirable version of the reality; third order simulacra, which illustrate a pretence of reality where there is no clear distinction between reality and its representation, and in consequence, the copy replaces and improves upon the reality; and fourth order simulacra, or pure simulation, whereby the connection to reality is fully severed. These are signs that simulate something that never existed, in a form of hyperreality in which the copy has become truth.

According to Bukatman (1993, 2) “it has become increasingly difficult to separate the human from the technology”. Following Baudrillard, the study will conceptualise emerging forms of human-computer interaction (HCI) in the age of GenAI across the four different layers of simulacra. Doing so will significantly contribute to the current theoretical understanding of HCI, as well as provide service business practitioners, particularly strategic and operational decision-makers, insight into how best to integrate GenAI into organisational strategy, service processes, and workflows (i.e. business design, service design, job design).

Results/Findings

The results of this conceptual study will help service business stakeholders to better understand the opportunities and challenges (that is, the costs and benefits) of adopting novel GenAI tools as part of day-to-day operations. To illustrate, the number of emerging digital content creation tools has recently skyrocketed. Codex automatically generates lines of code, DALL-E 2 and Midjourney generate high-resolution images, ElevenLabs generates voice from existing samples, GPT-4 generates short- or long-form text, Autodraw is the “autocorrect” for digital drawing, Fontjoy generates font pairings, Namelix generates business names and logos, and Make-A-Video and D-ID generate animated videos from text prompts. In addition to new tools, generative AI capabilities are also increasingly being added to existing software. For example, Photoshop recently added a feature called Generative Fill to its photo editing suite to enable new types of photo editing capabilities.

Novel digital content creation tools bring new affordances to stakeholders involved in value creation in service business, from workers to businesses and the educational sector.

For the worker, emerging technology offers new tools, enabling new forms of creativity to emerge in human-technology collaborative teams, e.g. in marketing or sales. The automation of more tasks changes workflows, making it imperative to assess when and how the human should be kept in the loop (Ivanov, 2021). New tools also bring the need for training, and the overall shift to more automated work processes poses questions around upskilling and re-skilling of staff (Tuomi et al., 2020). For service businesses, novel forms of human-technology interaction offer more ease of access, more control and the possibility to do more in-house. However, technology adoption becomes the likely bottleneck, as businesses will have to consider the costs and benefits of different emerging tools.

Which tools to use, which to ignore? How to use them most effectively? Finally, for the educational sector, the indistinguishability of digitally generated content from human-generated material presents a double-edged sword. On one hand, new tools mean an abundance of higher quality content, but on the other, an authenticity crisis might follow.

How to assess content created through human-technology collaboration? How to deal with plagiarism and intellectual property in the context of AI-generated content?

Conclusion

Overall, from a sociotechnical perspective, research on human-computer interaction (HCI) and collaboration has witnessed significant developments and prominent theories over the last decades. Recently, a key development has been the increasing integration of AI and machine learning into HCI systems, most notably generative AI, enabling e.g. more adaptive and personalised user experiences. This has led to the emergence of new types of human-computer interactions, prompting a need to re-conceptualise HCI.

Besides Baudrillard’s (1994) simulacra, previous theories like Activity Theory and Distributed Cognition have played crucial roles in understanding collaboration in HCI. Activity Theory emphasises the social and contextual aspects of human-computer interaction, highlighting the interplay between users, tools, and the environment (Bonnie, 1995). Distributed Cognition, on the other hand, focuses on how cognitive processes are distributed across individuals, artefacts, and the environment, emphasising the importance of collaboration and shared understanding in achieving complex tasks (Hollan et al., 2000).

Additionally, theories such as Social Presence Theory have contributed to understanding communication and collaboration in computer-mediated environments. Social Presence Theory explores the degree to which technology can convey a sense of being present with others (Gunawardena, 1995).

Noting the development of previous theory on human-computer interaction, and drawing further inspiration from the concept of simulacra, we argue that Baudrillard’s (1994) notions of simulacra increasingly meet the everyday reality of human-technology interaction, prompted recently by the increase in capability of and access to powerful new GenAI systems. As consumption moves further to artificial and digital environments such as the metaverse, many consumers, workers, students, and managers will experience ever-increasing versions of the simulacra instead of ‘reality’. Conceptually and empirically exploring emerging forms of human-technology interaction in the context of service business and generative AI is thus important and timely. Future research should aim to adopt varied ontological, epistemological and methodological approaches. Particularly useful might be studies that effectively bridge the gap between theory and practice and demonstrate measurable effects in real-world environments, for example field experiments, multiple case studies or action research.

References

Baudrillard, J. (1994). Simulacra and simulation. Ann Arbor: University of Michigan Press.

Bonnie, N. (1995). Context and consciousness: activity theory and human-computer interaction. Cambridge, MA: MIT Press.

Brower, K. (1998). Photography in the age of falsification. The Atlantic, 5/1998.

Bukatman, S. (1993). Terminal identity. The virtual subject in postmodern science fiction. London: Duke University Press.

Davenport, T., Mittal, N. (2022). How generative AI is changing creative work. Available at: https://hbr.org/2022/11/how-generative-ai-is-changing-creative-work (Accessed 6th July 2023).

Gunawardena, C. (1995). Social presence theory and implications for interaction and collaborative learning in computer conferences. International Journal of Educational Telecommunications 1(2/3), pp. 147-166.

Hollan, J., Hutchins, E., Kirsh, D. (2000). Distributed cognition: toward a new foundation for human-computer interaction research. ACM Transactions on Computer-Human Interaction 7(2), pp. 174-196.

Hou, Y., Yang, W., Sun, Y. (2017). Do pictures help? The effects of pictures and food names on menu evaluations. International Journal of Hospitality Management 60, pp. 94-103.

Ivanov, S. (2021). Robonomics: The rise of the automated economy. ROBONOMICS: The Journal of the Automated Economy 1.

Keyes, R. (2004). The Post-Truth Era: Dishonesty and Deception in Contemporary Life. New York: St. Martin’s Press.

Lazard, A., Bock, M., Mackert, M. (2020). Impact of photo manipulation and visual literacy on consumers’ responses to persuasive communication. Journal of Visual Literacy 39(2), pp. 90-110.

Leetaru, K. (2019). Deep fakes are merely today’s photoshopped scientific images. Available at: https://www.forbes.com/sites/kalevleetaru/2019/08/24/deep-fakes-are-merely-todays-photoshopped-scientific-images/ (Accessed 21st June 2023).

Plangger, K., Campbell, C. (2022). Managing in an era of falsity: Falsity from the metaverse to fake news to fake endorsement to synthetic influence to false agendas. Business Horizons 65(6), pp. 713-717.

Tuomi, A., Tussyadiah, I., Ling, E.C., Miller, G., Lee, G. (2020). x=(tourism_work) y=(sdg8) while y=true: automate (x). Annals of Tourism Research 84, 102978.

Tuomi, A. (2021). Deepfake consumer reviews in tourism: Preliminary findings. Annals of Tourism Research Empirical Insights, 100027.

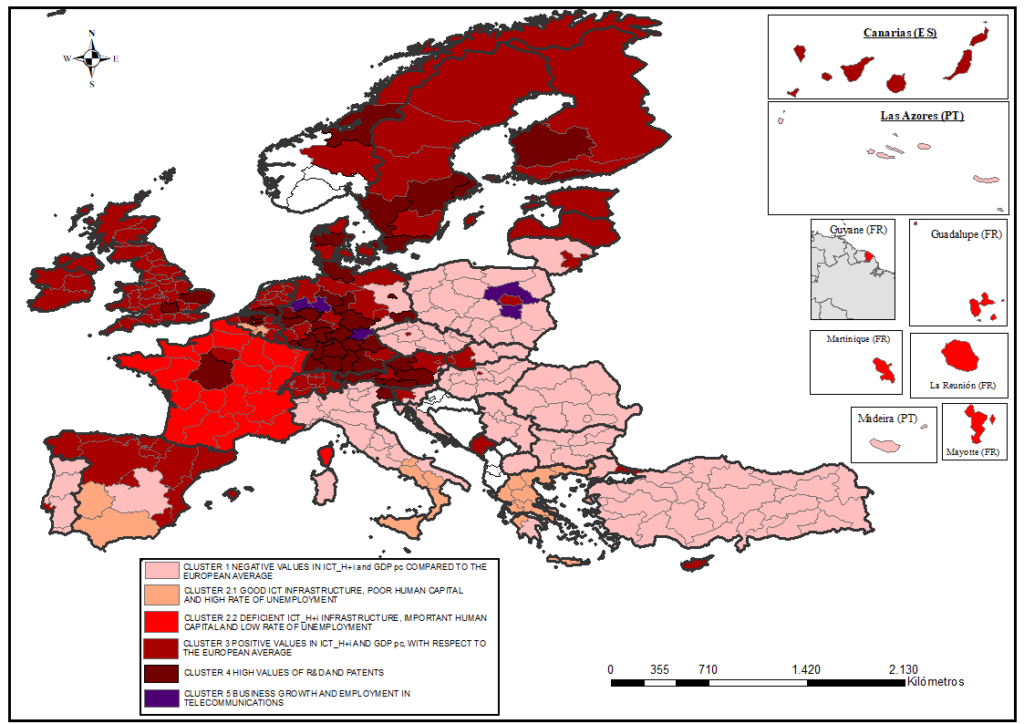

Information society and socio-economic characteristic in Europe: a typology of regions

Purificación Crespo-Rincón

purcrerin@alum.us.es

Rosa Jordá-Borrell

Francisca Ruiz-Rodríguez

Universidad de Sevilla, España

Keywords: information and communication technologies (ICTs), households and individuals (H+i), socioeconomic and technological factors, NUTS 2

Introduction

The digital development of a region is considered the process that drives the transformation of the region towards the information and knowledge society, and that makes it possible to modify conditions through the generation and processing of information and knowledge in order to improve competitiveness, innovation and the adoption of information and communication technologies (ICTs) (González-Relaño et al., 2021; Hernández & Maudos, 2021).

ICT access and usage have given rise to the so-called digital society. In this society, the public, companies and institutions establish their relationships (social, administrative, employment, consumption, etc.), while also generating data and information via digital devices and platforms, giving rise to digital development (Ruiz-Rodríguez et al., 2018). Digital technologies are therefore a constant component of the lives of people and companies, which depend on these technologies and their specific infrastructures.

At present, empirical research on the information and communication society at the NUTS 2 regional level in the European Union (EU) is insufficient, especially the analysis of its relationship with the socioeconomic environment. Moreover, when this topic has been addressed, studies have focused above all on the factors that determine basic digital development (Cruz-Jesús et al., 2016). Identification of this research gap has led to the proposition of the following hypotheses, with their corresponding research questions:

- The differences between regions in terms of Internet access for the European population are small, as EU and national government policies on communications equipment and infrastructure have helped to significantly reduce these potential inequalities. This raises the question: Are regions with good telecommunications infrastructure coverage, such as the availability of broadband, those that most use advanced ICTs?

- The distance between regions, in terms of the use of social networks by the population, is small, while the dissimilarities between regions in the use of advanced ICTs are important. Are these latter differences associated with the socio-economic characteristics of the regions? Which socio-economic factors or elements are more important? Is the innovation and business capacity of a region associated with the advanced use of ICTs by the population of that region?

In this context, the objective of this study is, firstly, to identify the socio-economic (related to training, enterprise and regional wealth) and technological (innovation, digitalisation, etc.) factors that may define the underlying structure of the digital development of households and individuals; and secondly, to characterise the socio-economic environments that are associated with advanced ICT usage by households and individuals (ICT_H+i) at the NUTS 2 regional level in Europe.

Theoretical framework

In recent decades, the widespread diffusion of ICTs has led to a transformation of the world into an information society. Thanks to ICT infrastructure and equipment, individuals and governments now have much better access to information and knowledge than ever before in terms of scale, scope and speed.

The rapid advancement of ICTs worldwide is arousing the interest of many researchers, motivating them to focus their research on the impact of ICT diffusion on socioeconomic growth. According to Stanley et al. (2018), the socioeconomic characteristics of a region may or may not favour advanced ICT access and usage in that region. In this context, prominent modern theories such as neo-Schumpeterian theories (Schumpeter & Nichol, 1934; Malacarne, 2018; Garbin & Marini, 2021) and neoclassical growth theory have pointed out the positive relationship between the socioeconomic characteristics of a territory/region and the development of technology, including ICTs.

Consequently, these theories suggest that ICTs are a key input for a region to improve the production process, modernise technology and improve the skills of the workforce. They also mean that competitiveness and complex technology are essential elements of the regional business fabric (Jordá-Borrell, 2021).

Methodology

In order to meet the objectives and verify the hypotheses, the methodology used is as follows:

SCOPE OF THE STUDY